AssistantBench evaluates the ability of web agents to automatically solve realistic, time-consuming tasks. The benchmark includes 214 tasks from multiple domains, covering more than 525 pages from 258 different websites. Please check out our paper for more details.

Because AssistantBench tasks require planning how to execute them and carrying information across steps, we introduce SeePlanAct (SPA), a new web agent that extends SeeAct with specialized planning and memory components.

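Even though SPA outperforms previous agents, AssistantBench remains challenging for current systems: the best accuracy, achieved by SPA with a closed-book fallback, is currently 25.2%. In the table below, "X → Closed-book" denotes an ensemble that falls back to the closed-book LM whenever X does not return an answer, and precision is the accuracy on the tasks for which an answer was returned.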

| Model | Accuracy (%) | Answer rate (%) | Precision (%) | Exact match (%) |
|---|---|---|---|---|
| SPA (ours) → Closed-book | 25.2 | 91.7 | 27.5 | 9.9 |
| SeeAct → Closed-book | 23.4 | 89.5 | 26.1 | 9.4 |
| Closed-book LM (1-shot) | 22.2 | 89.5 | 24.8 | 8.3 |
| Retrieval-augmented LM (1-shot) → Closed-book | 19.5 | 92.8 | 21.0 | 6.1 |
| Retrieval-augmented LM (0-shot) → Closed-book | 18.7 | 93.9 | 19.9 | 6.6 |
| Closed-book LM (0-shot) | 16.5 | 53.6 | 30.7 | 6.1 |
| Retrieval-augmented LM (0-shot) | 11.8 | 60.2 | 19.5 | 5.5 |
| SPA (ours) | 11.1 | 35.9 | 30.9 | 5.5 |
| Retrieval-augmented LM (1-shot) | 10.7 | 48.1 | 22.4 | 3.9 |
| SeeAct | 4.1 | 15.5 | 26.3 | 2.2 |
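The relationship between the table's metrics can be sketched in a few lines of Python. This is a minimal sketch, not the official AssistantBench evaluation code: it assumes each task already has a score in [0, 1] (or `None` when the system abstained), treats a score of 1.0 as an exact match, and the helper names `summarize` and `fallback` are hypothetical. Under these assumptions, precision is accuracy divided by answer rate, and an "X → Closed-book" row corresponds to filling X's abstentions with the closed-book model's answers.

```python
from typing import Dict, List, Optional, Sequence

Score = Optional[float]  # None means the system returned no answer


def summarize(scores: Sequence[Score]) -> Dict[str, float]:
    """Aggregate per-task scores (in [0, 1]) into percentage metrics."""
    n = len(scores)
    answered = [s for s in scores if s is not None]
    total = sum(answered)
    return {
        # Abstentions count as 0, so accuracy is averaged over all tasks.
        "accuracy": 100 * total / n,
        "answer_rate": 100 * len(answered) / n,
        # Precision averages only over tasks that received an answer.
        "precision": 100 * total / len(answered) if answered else 0.0,
        # Assumption: a task counts as an exact match when its score is 1.0.
        "exact_match": 100 * sum(1 for s in answered if s == 1.0) / n,
    }


def fallback(primary: Sequence[Score], backup: Sequence[Score]) -> List[Score]:
    """Use the backup system's score wherever the primary system abstained."""
    return [p if p is not None else b for p, b in zip(primary, backup)]


# Toy scores for four tasks (made up for illustration, not benchmark data).
agent = [1.0, None, 0.5, None]
closed_book = [0.0, 1.0, 1.0, None]
print(summarize(agent))
print(summarize(fallback(agent, closed_book)))
```

The same arithmetic holds for the reported numbers: SPA's 11.1% accuracy at a 35.9% answer rate gives a precision of about 30.9%, and the closed-book fallback raises the answer rate to 91.7% while lowering precision to 27.5%.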

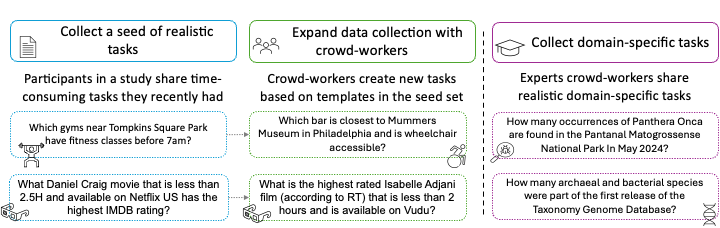

To create AssistantBench, we first collected a seed set by asking participants in a study to share time-consuming web tasks they had recently performed. We then expanded the seed set by showing its tasks to crowd-workers as templates. Finally, domain experts contributed domain-specific tasks to increase diversity.

To get started with AssistantBench, simply download our HuggingFace dataset. We provide a development set with task answers, URLs, and explanations. We keep the test set answers hidden for now and provide the option to submit predictions via our HuggingFace portal.
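As a minimal sketch, the development set can be loaded with the Hugging Face `datasets` library. The dataset id `AssistantBench/AssistantBench`, the split name `validation`, and the helper name `load_assistantbench` are assumptions for illustration; check the dataset card for the exact identifiers and field names.

```python
def load_assistantbench(split: str = "validation"):
    """Hypothetical helper that loads one split of AssistantBench.

    Assumes the dataset id "AssistantBench/AssistantBench" and that the
    development set is exposed as the "validation" split.
    """
    # Imported inside the function so this snippet can be defined
    # without the `datasets` package installed.
    from datasets import load_dataset

    return load_dataset("AssistantBench/AssistantBench", split=split)
```

Calling `load_assistantbench()` downloads the split; printing its first element is a quick way to see which fields (answers, URLs, explanations) are available before writing predictions.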

BibTeX

@misc{yoran2024assistantbenchwebagentssolve,
  title={AssistantBench: Can Web Agents Solve Realistic and Time-Consuming Tasks?},
  author={Ori Yoran and Samuel Joseph Amouyal and Chaitanya Malaviya and Ben Bogin and Ofir Press and Jonathan Berant},
  year={2024},
  eprint={2407.15711},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2407.15711},
}